Parallel time integration using Batched BLAS (Basic Linear Algebra Subprograms) routines - ScienceDirect

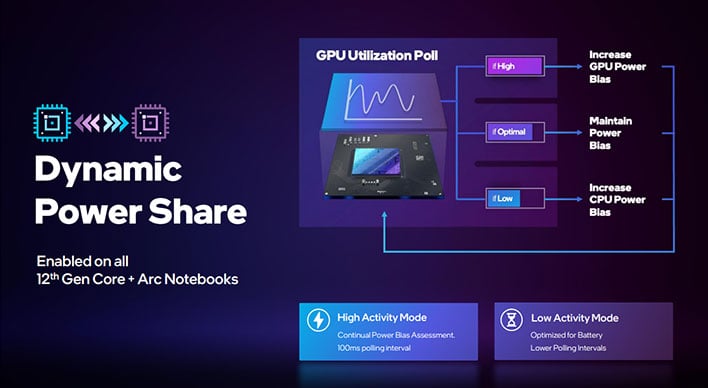

Intel Benchmarks Show Arc A770M Battling NVIDIA's GeForce RTX 3060 In Mobile GPU Showdown | HotHardware

![XKBlas: a High Performance Implementation of BLAS-3 Kernels on Multi-GPU Server | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/0ecd09a3025ebc09a989dc40c7361af78e8a6ee6/3-Figure2-1.png)

[PDF] XKBlas: a High Performance Implementation of BLAS-3 Kernels on Multi-GPU Server | Semantic Scholar

Performance of level-one BLAS operations on multiple GPUs. Both axes... | Download Scientific Diagram